We continue to hear from customers that they see the immense value and importance of artificial intelligence (AI), generative AI, vector search, and edge computing. These technologies are becoming increasingly critical for collecting data and turning it into actionable insights. At the same time, IoT devices continue to proliferate rapidly, and more decisions are made in real time based on data streaming from sensors.

Many organizations are on the verge of fully integrating these technologies so they can deliver an enhanced customer experience. They see AI as a way to meet key goals for their applications: being fast, always available, able to interact in real time, and able to engage in context.

Companies are both excited and hesitant about AI

While excitement over the possibilities of AI is palpable, companies are taking a cautious approach. They have concerns about data security, compliance, and the legal implications of using AI, and they continue to evaluate the best ways to build and govern AI-powered applications. While there are still privacy, security, and accuracy issues to work out, it's clear that the possibilities AI can unlock are too compelling to pass up.

AI requires reliable data

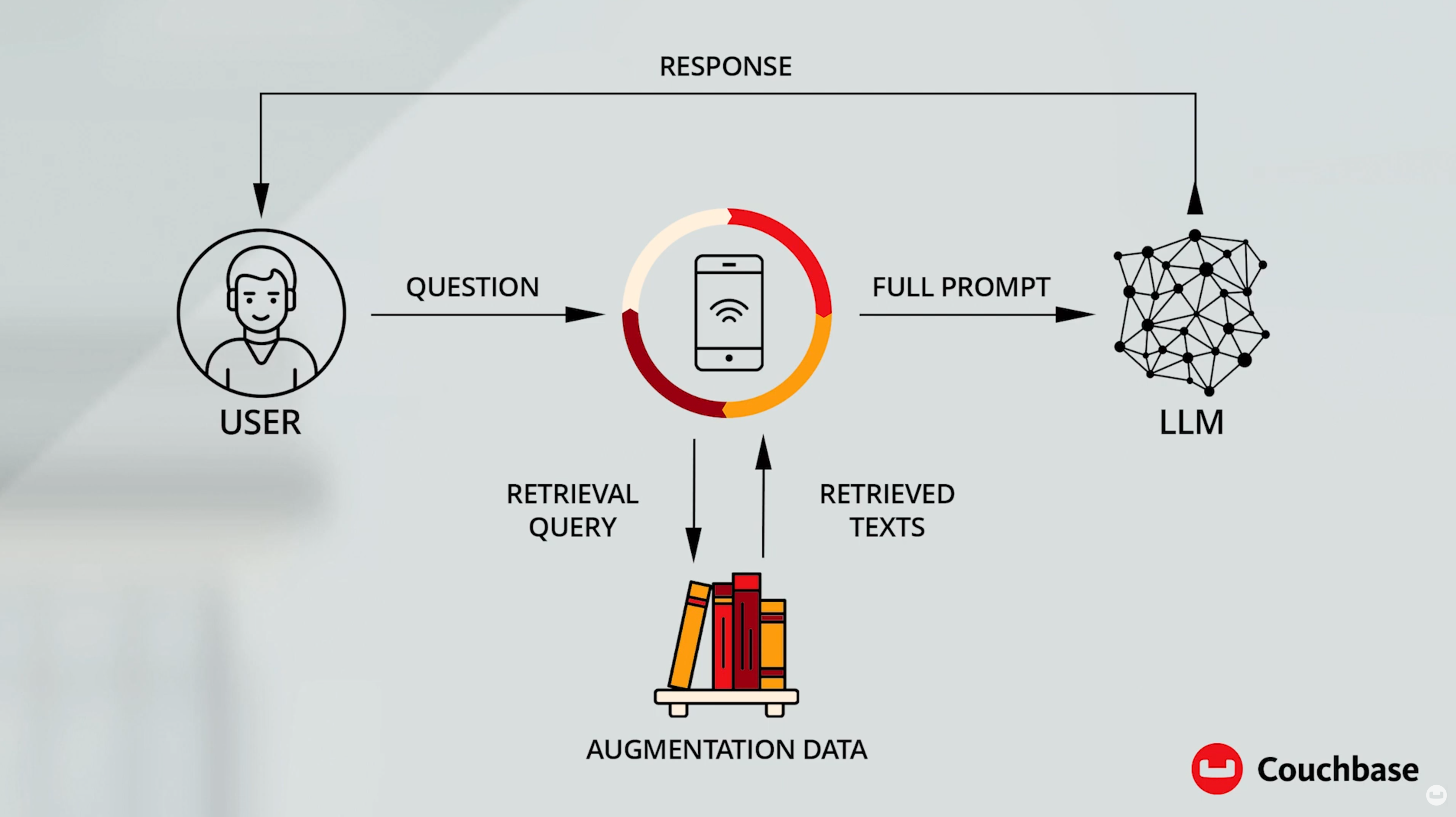

Data is the lifeblood of AI model training. The more current, clean, and reliable the data provided to LLMs, the more trustworthy their responses will be. In general, the more relevant data AI models have to work with, the more precise they become. How and where you process that data also affects the speed and availability of AI-powered applications.

Utilizing edge computing to improve speed and availability

If you store and process data only in the cloud, mobile devices can run into speed and availability problems. With a clear line of sight to a wireless access point, performance can be smooth, but over cellular/5G, running video and analytics at the same time can be a struggle. Round trips from the edge to the cloud and back require significant bandwidth, which is especially problematic where connectivity is limited, such as in crowded stadiums, on cruise ships and airplanes, and for field workers.

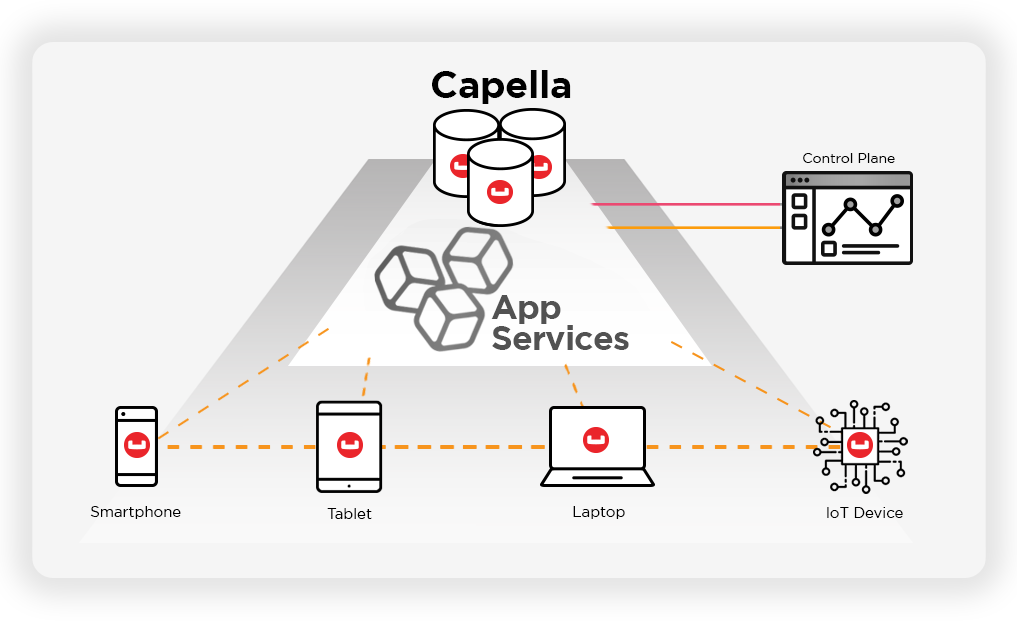

Couchbase provides a cloud-to-edge database with vector search that integrates directly with AI models at the edge. Developers can store and process data both in the cloud (Capella) and on devices with an embedded database (Couchbase Lite). The database can sit close to where the data is used, such as a retail store, distribution center, or hospital. Both Capella and Couchbase Lite support vector search and automatically synchronize data between devices and the cloud.

Processing data locally, a fundamental principle of edge computing, greatly reduces dependence on the cloud and on an internet connection. Putting the database on the device itself (phone, tablet, kiosk, POS terminal, or IoT device) helps customers achieve 100% availability and guaranteed response times for vector search, because queries no longer have to wait on the network.

Examples of vector search in action

One example of vector search in action is handling unscannable items at a grocery store self-checkout. Today, you type in the item's name, select the right match on the screen, and then bag it. Visual semantic search flips that process: you set the item on the counter, the camera scans and recognizes it, and then you bag it. This cuts a 10-20 second process down to 2 seconds. Those may seem like small numbers, but across long lines of grocery shoppers, or hundreds of people arriving at an airport or a hospital, the savings add up quickly.
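At its core, the visual lookup described above is a nearest-neighbor search: the camera image is turned into an embedding vector, and the system returns the catalog item whose stored vector is most similar. The sketch below shows that idea with brute-force cosine similarity. It is a minimal illustration under stated assumptions, not Couchbase's API or implementation: `CATALOG`, `find_closest_items`, and the 4-dimensional vectors are hypothetical, and real image embeddings would come from a vision model and have hundreds of dimensions.

```python
import math

# Hypothetical catalog: item name -> embedding vector.
# These 4-dimensional toy vectors are made up for illustration; a real system
# would store embeddings produced by an image model.
CATALOG = {
    "banana": [0.9, 0.1, 0.0, 0.2],
    "lemon": [0.8, 0.3, 0.1, 0.1],
    "red apple": [0.1, 0.9, 0.2, 0.0],
    "avocado": [0.0, 0.2, 0.9, 0.3],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def find_closest_items(query, catalog, k=1):
    """Return the k (name, score) pairs most similar to the query, by brute force."""
    scored = [(name, cosine_similarity(query, vec)) for name, vec in catalog.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    # Hypothetical embedding of the item the camera just captured.
    camera_embedding = [0.85, 0.15, 0.05, 0.2]
    for name, score in find_closest_items(camera_embedding, CATALOG, k=2):
        print(f"{name}: {score:.3f}")
```

Brute force is fine for a small on-device catalog; larger collections typically use an approximate nearest-neighbor index instead, trading a little accuracy for much faster lookups.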

Vector search and image recognition on mobile devices will also have a huge impact. Looking up an item by recognizing the item itself can work much better than relying on barcodes. Barcodes can fade, wrinkle, and be tampered with over time, whereas vector search recognizes the item directly, speeding up the process for both customers and retailers.

Another example is insurance claims. A policyholder could photograph damage to their car and quickly find out which parts need to be repaired and which body shop to take it to. There are countless potential use cases for vector and image search.

Vectors from natural language

LLMs and related embedding models are well suited to turning natural language into vectors. Audio input offers another way to add context about what the user is looking for, which improves accuracy. Traditional keyword search and structured queries are fairly strict, but vectors provide more flexibility because they match on meaning rather than on exact terms.
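To make the strict-versus-flexible contrast above concrete, the sketch below runs the same request two ways over a tiny recipe list: an exact keyword match, and a ranking by vector similarity. Everything here is hypothetical: the recipe names, the 3-dimensional vectors (whose axes loosely stand for "Italian", "comfort food", and "dessert"), and the query vector are hand-made stand-ins for what an embedding model would produce, and the functions are illustrative rather than any product's API.

```python
import math

# Hypothetical recipe embeddings; axes loosely mean (Italian, comfort food, dessert).
RECIPES = {
    "Spaghetti marinara": [0.95, 0.7, 0.0],
    "Margherita pizza": [0.9, 0.8, 0.1],
    "Tiramisu": [0.7, 0.3, 0.95],
    "Chicken tacos": [0.0, 0.7, 0.0],
}

# Hypothetical embedding of the request "I have a taste for Italian food".
QUERY_VEC = [0.9, 0.6, 0.1]


def keyword_search(query, titles):
    """Strict matching: return titles that share at least one word with the query."""
    words = set(query.lower().split())
    return [t for t in titles if words & set(t.lower().split())]


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def vector_search(query_vec, recipes):
    """Flexible matching: rank every recipe by similarity to the query vector."""
    ranked = [(name, cosine_similarity(query_vec, vec)) for name, vec in recipes.items()]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    print("keyword:", keyword_search("italian food", RECIPES))  # no title contains these words
    for name, score in vector_search(QUERY_VEC, RECIPES):
        print(f"vector: {name} ({score:.3f})")
```

The keyword search finds nothing because no title contains "italian" or "food", while the vector ranking still surfaces the Italian dishes first.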

For example, instead of searching for a specific item, you could say: "I'm making dinner tonight, I have a taste for Italian food, two adults and three kids are coming over, what would you recommend I make with the ingredients I have on hand?" When you can talk to the system naturally through audio, with LLMs and vector search behind it, you can be far more detailed and precise.

Conclusion

Vector search and edge computing are truly transformative capabilities. Edge infrastructure will be an important part of many solutions as the need for speed and localized processing grows. IT leaders must work with business leaders to help them visualize and understand what is possible. Conceiving potential use cases and socializing them across the organization is a critical role for IT, and one that greatly benefits the organization as a whole.


- Learn more about Couchbase and vector search

- Start using Capella for free and try out vector search today