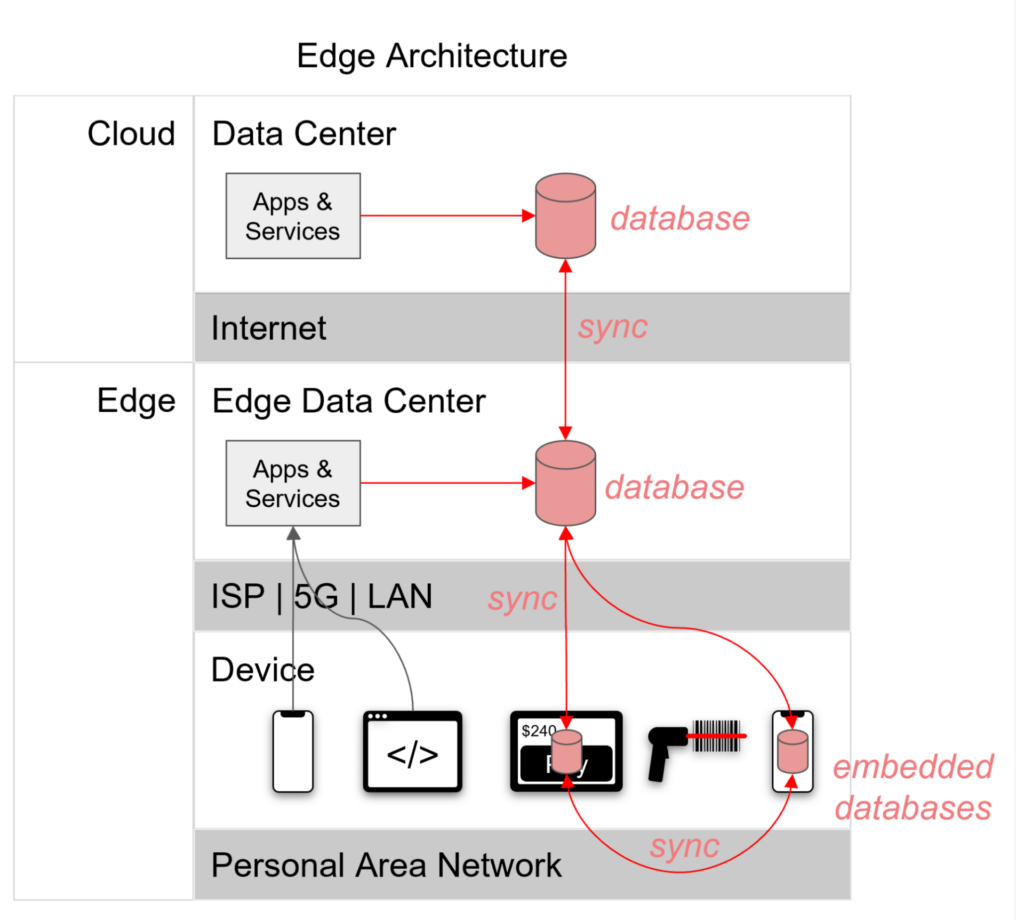

Edge computing is a technical architecture that extends data processing from the cloud to the edge, moving it closer to the point of interaction, including onto mobile devices. From the database perspective, a typical architecture includes a database in the cloud, a database at the edge in an edge data center and embedded databases running within apps on edge devices like phones and tablets, all linked via data synchronization for consistency. Modern mobile database platforms combine all of these capabilities.

This architecture provides four distinct advantages for applications:

- Speed — By processing data closer to the point of interaction, the distance it has to travel is reduced, dramatically reducing app latency.

- Resilience — By processing data at the edge, you reduce dependencies on an inherently unreliable internet for data, reducing app downtime. If any upstream layer of the architecture is disrupted, downstream applications are not affected at all.

- Data governance — With edge computing, sensitive data never has to leave the edge.

- Bandwidth efficiency — By distributing data storage to the edge, you reduce bandwidth use and egress costs of pulling data from the cloud.
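
The speed advantage can be illustrated with some rough arithmetic. The sketch below estimates the minimum round-trip propagation delay over fiber, where signals travel at roughly two-thirds of the speed of light. The two distances are illustrative assumptions, not measurements, and real latency also includes routing, queuing and processing time.

```python
# Back-of-the-envelope propagation delay over fiber.
# Signals in fiber travel at roughly two-thirds of the speed of light in a vacuum.
SPEED_IN_FIBER_KM_PER_S = 200_000


def round_trip_ms(distance_km: float) -> float:
    """Minimum round-trip propagation delay in milliseconds.

    Ignores routing, queuing and server processing time.
    """
    return 2 * distance_km / SPEED_IN_FIBER_KM_PER_S * 1000


# The distances below are assumed values chosen only for illustration.
for label, km in [("distant cloud region", 2000), ("edge data center", 50)]:
    print(f"{label}: {round_trip_ms(km):.1f} ms")
# distant cloud region: 20.0 ms
# edge data center: 0.5 ms
```

Data that is already on the device avoids the network hop entirely, which is why embedded databases sit at the bottom tier of this architecture.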

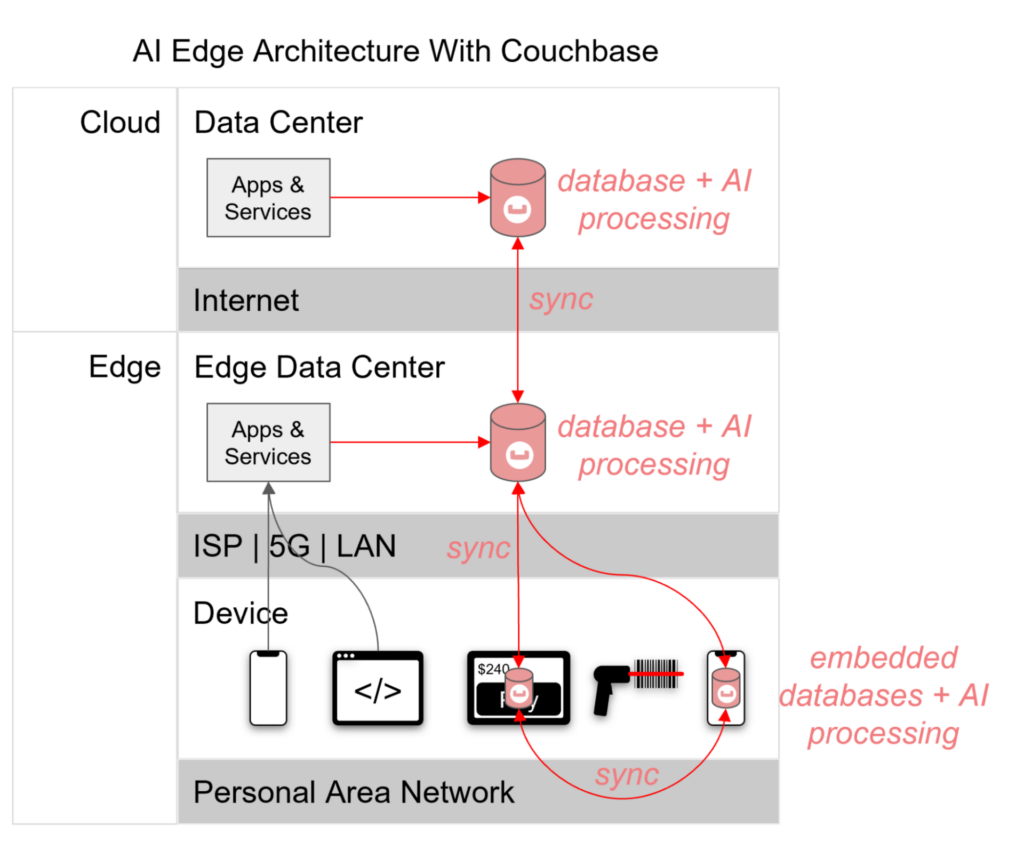

When applied to an AI system, the advantages of edge computing can greatly accelerate and amplify AI capabilities, both in the training of learning algorithms and in the practical application of models.

‘Hybrid’ AI Is Both Centralized and Decentralized

A recent Forbes article talks about the notion that the “future of AI is hybrid,” meaning AI needs the ability to process data both in the cloud and at the edge to realize its full potential. In the article, the author states:

“AI needs somewhere powerful and stable for model training and inference, which require huge amounts of space for processing complex workloads. That’s where the cloud comes in. At the same time, AI also needs to happen fast. For it to be useful, it needs to process closer to where the action actually happens — the edge of a mobile device.”

This observation highlights the notion that LLM-powered AI is gravitationally centralized, while the creation and consumption of the data it uses and produces will be decentralized and highly distributed.

The hybrid aspect of AI described in the article can be elegantly achieved with an edge computing architecture, where the cloud, the edge data center and edge devices aren’t separate environments, per se, but are actually layers within a data-processing ecosystem linked via synchronization.

In this model, AI workloads can be processed where most appropriate — deep learning AI training happens against immense amounts of data in the central cloud, where storage and horsepower are limited only by budget, while smaller machine learning AI models can run at the edge directly on edge devices, where they can do things like make on-the-spot recommendations to a user based on local data and current situation.
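
As a rough illustration of this placement idea, the sketch below routes a workload to a tier based on whether it involves training and how large the model is. The `Workload` type, the `choose_tier` function and both size thresholds are hypothetical and invented for this example. A real system would also weigh data locality, connectivity and cost.

```python
from dataclasses import dataclass

# Hypothetical limits, chosen only to make the example concrete.
DEVICE_MODEL_LIMIT_MB = 100
EDGE_DC_MODEL_LIMIT_MB = 5_000


@dataclass
class Workload:
    name: str
    model_size_mb: float
    is_training: bool  # training is assumed to need cloud-scale data and compute


def choose_tier(workload: Workload) -> str:
    """Pick the layer where a workload should run, smallest suitable tier first."""
    if workload.is_training:
        return "cloud"
    if workload.model_size_mb <= DEVICE_MODEL_LIMIT_MB:
        return "device"
    if workload.model_size_mb <= EDGE_DC_MODEL_LIMIT_MB:
        return "edge data center"
    return "cloud"


print(choose_tier(Workload("train recommender", 40_000, is_training=True)))
print(choose_tier(Workload("on-device recommendations", 25, is_training=False)))
```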

What’s needed to facilitate the hybrid AI concept is a database built for edge computing that also supports AI.

The Main Ingredient of AI: Data

Regardless of the techniques or algorithms used, the backbone and most necessary resource for achieving AI is data. Data is fuel for machine learning: historical data provides context and precedent, and real-time data provides situational awareness. But it’s about much more than simply acquiring data. Where you store and process that data has huge implications for the success (or failure) of AI-based systems, so careful consideration is required when designing an AI architecture.

AI at the Edge with a Mobile Database

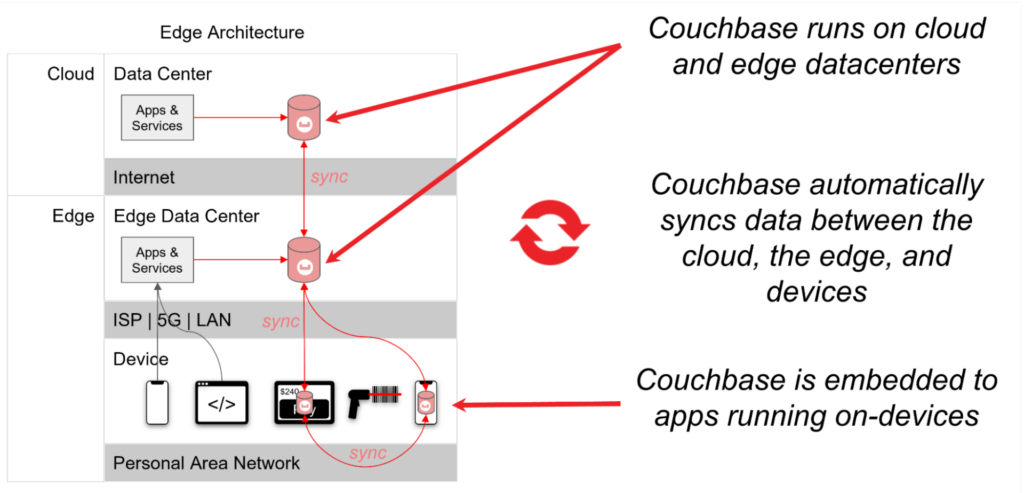

Couchbase is an in-memory, distributed JSON-document cloud database that supports SQL, search, eventing and analytics. The platform is used to power large enterprise applications and can scale to millions of users with 24×365 uptime. It also provides unique support for edge computing as well as AI processing.

Couchbase can run in the cloud, at the edge and on devices, and it can sync data across these layers, achieving the edge computing advantages of speed, resilience, data governance and bandwidth efficiency for applications.

Couchbase is also able to integrate AI across the architecture through its ability to call models directly from the database and feed them with real-time operational data, whether it be in the cloud, at the edge or on a mobile device. These capabilities combine to make the “hybrid AI” concept a reality.

Let’s explore this in more detail.

Edge Native

The Couchbase Mobile product stack natively supports edge computing architectures by providing:

- A cloud native database: Available as a fully managed and hosted Database as a Service with Couchbase Capella, or as Couchbase Server, which you deploy and host yourself.

- An embedded database: Couchbase Lite is the embeddable version of Couchbase for mobile and IoT apps that stores data locally on the device. It provides full CRUD and SQL query functionality, and it supports all major platforms including iOS, OS X, tvOS, Android, Linux, Windows, Xamarin, Kotlin and more.

- Data synchronization from the cloud to the edge: A secure, hierarchical gateway for data sync over the web, as well as peer-to-peer sync between devices, with support for authentication, authorization and fine-grained access control. Choose from fully hosted and managed data sync with Capella App Services, or install and manage Couchbase Sync Gateway yourself.

Couchbase Mobile’s built-in data synchronization connects the stack, syncing data between the backend cloud database and the embedded database running on edge devices as connectivity allows, while during network disruptions apps continue to operate due to local data processing.
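
The offline-first behavior described here can be sketched in a few lines. The example below is a conceptual illustration only and does not use any Couchbase API: `LocalStore` is a hypothetical stand-in for an embedded database, a plain dictionary stands in for the cloud database, and the sync is push-only. Real synchronization is bidirectional and also handles conflicts, authentication and access control.

```python
class LocalStore:
    """Hypothetical stand-in for an embedded on-device database."""

    def __init__(self):
        self.docs = {}
        self.pending = []  # ids of documents changed since the last successful sync

    def save(self, doc_id, doc):
        # Writes always succeed locally, whether or not the network is available.
        self.docs[doc_id] = doc
        if doc_id not in self.pending:
            self.pending.append(doc_id)

    def get(self, doc_id):
        # Reads are served from local data.
        return self.docs.get(doc_id)

    def sync(self, remote, online):
        """Push pending changes when connected; return how many were pushed."""
        if not online:
            return 0
        pushed = 0
        for doc_id in self.pending:
            remote[doc_id] = self.docs[doc_id]
            pushed += 1
        self.pending.clear()
        return pushed


cloud = {}  # stands in for the backend cloud database
store = LocalStore()
store.save("order::1", {"item": "coffee", "qty": 2})

print(store.get("order::1"))            # the app keeps working while offline
print(store.sync(cloud, online=False))  # 0: nothing is pushed during a disruption
print(store.sync(cloud, online=True))   # 1: the queued change syncs on reconnect
```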

With the Couchbase Mobile stack you can create multi-tier edge architectures to support any speed, availability or low bandwidth requirement.

AI Ready

Couchbase Mobile is not only designed for edge computing; it also supports AI from cloud to edge, including on devices.

Cloud AI with the Couchbase Analytics Service

Couchbase provides in-memory processing for hyper-fast responsiveness and a distributed architecture for scale and resilience, bringing the necessary speed, storage capacity and workload horsepower required for processing the huge amounts of data needed for training AI models.

It also provides a built-in Analytics Service, a feature that allows analysis of operational data without requiring it to be moved into a separate analytic system, eliminating the need for lengthy ETL (extract, transform, load) processes to run before data can be used for model training or analysis.

What’s more, even though Couchbase is a document database, data scientists work with it using a familiar language: SQL, complete with joins and aggregations. This allows them to write complex and highly contextual queries against massive datasets that return results in milliseconds or less, reducing time to insight and speeding up data prep and model training iterations.

The Couchbase Analytics Service offers a feature for integrating AI called Python User Defined Functions (UDF), which allows developers to bind Python code as a function and call it in SQL queries. This enables them to create a seamless pipeline, without tedious ETL processes, from Python-based machine learning models to Couchbase Analytics for applying AI at scale to things like sentiment analysis, predicting likely outcomes or classifying items based on operational data returned from analytics queries.
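
To make the idea concrete, here is a minimal sketch of the kind of Python code that could sit behind such a function. Everything in it is an assumption for illustration: the `SentimentModel` class, its word lists and the sample rows are hypothetical, and the tiny keyword scorer stands in for a real trained model. How the code is packaged, registered and called from SQL is covered in the Couchbase documentation and is not shown here.

```python
class SentimentModel:
    """Toy keyword-based scorer standing in for a real machine learning model."""

    POSITIVE = {"great", "love", "fast", "excellent"}
    NEGATIVE = {"bad", "slow", "broken", "terrible"}

    def classify(self, text: str) -> str:
        # Normalize words by lowercasing and stripping surrounding punctuation.
        words = [w.strip(".,!?").lower() for w in text.split()]
        score = sum(w in self.POSITIVE for w in words) - sum(
            w in self.NEGATIVE for w in words
        )
        if score > 0:
            return "positive"
        if score < 0:
            return "negative"
        return "neutral"


# Hypothetical rows, shaped like results an analytics query might return.
rows = [
    {"id": 1, "review": "Great app, love it!"},
    {"id": 2, "review": "Sync is slow and broken."},
    {"id": 3, "review": "It works."},
]

model = SentimentModel()
for row in rows:
    row["sentiment"] = model.classify(row["review"])
    print(row["id"], row["sentiment"])
# 1 positive
# 2 negative
# 3 neutral
```

Binding a function like `classify` to a query means the model is applied where the data already lives, rather than exporting rows to a separate system first.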

An example of using machine learning models with Couchbase Analytics can be found in this blog post.

With the Analytics Service, Couchbase fulfills the cloud side of the “hybrid AI” concept.

Edge AI with Couchbase Lite Predictive Query

While heavy-duty AI workloads can run efficiently at scale in the cloud with Couchbase, there are cases where it is preferable to run smaller models at the edge on mobile devices, for example to make recommendations based on local real-time data. The increasing power of today’s mobile devices and the emergence of mobile-optimized machine learning models are making AI at the edge a reality.

In fact, Couchbase Lite’s Predictive Query API was designed for edge AI, allowing mobile apps to use pretrained machine learning models and run predictive queries against local on-device data. These predictions, made against real-time data captured by the mobile app, enable a range of compelling applications.
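
The general pattern behind a predictive query can be sketched as follows. This is a language-neutral illustration written in Python, not the Couchbase Lite API: `ModelRegistry`, `predictive_query`, `recommend_refill` and the sample documents are all hypothetical names and data invented for this example, and the simple rule stands in for a pretrained model.

```python
from typing import Callable, Dict, Iterable, List


class ModelRegistry:
    """Hypothetical registry that maps a model name to a prediction function."""

    def __init__(self) -> None:
        self._models: Dict[str, Callable[[dict], dict]] = {}

    def register(self, name: str, model: Callable[[dict], dict]) -> None:
        self._models[name] = model

    def predict(self, name: str, doc: dict) -> dict:
        return self._models[name](doc)


def predictive_query(
    docs: Iterable[dict],
    registry: ModelRegistry,
    model_name: str,
    where: Callable[[dict], bool],
) -> List[dict]:
    """Run the named model on each local document and keep the matching rows."""
    results = []
    for doc in docs:
        prediction = registry.predict(model_name, doc)
        if where(prediction):
            results.append({**doc, "prediction": prediction})
    return results


def recommend_refill(doc: dict) -> dict:
    # Stand-in for a pretrained model: flag items with under two days of stock.
    return {"refill": doc["stock"] < doc["daily_sales"] * 2}


registry = ModelRegistry()
registry.register("refill", recommend_refill)

# Hypothetical on-device documents captured by the app.
local_docs = [
    {"sku": "A", "stock": 3, "daily_sales": 4},
    {"sku": "B", "stock": 20, "daily_sales": 5},
]

hits = predictive_query(local_docs, registry, "refill", lambda p: p["refill"])
print([doc["sku"] for doc in hits])  # ['A']
```

Because both the documents and the model are local, the query needs no network round trip to return a prediction.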

By applying machine learning models on the mobile device, recommendations and predictions are more personalized, can be instantaneous and have more impact thanks to the guaranteed high availability, ultra-low latency and reduced network bandwidth usage enabled by local data processing. In essence, it eliminates the need to send data back and forth over the internet to distant cloud data centers to be evaluated by AI, so it makes the entire experience faster and more reliable.

Examples of AI at the edge include retail and e-commerce, where on-the-spot item recommendations can be made based on images or photos; hospitality where personal preferences are matched with location, time, weather and other factors to recommend amenities in real time; or industrial manufacturing, where problems in high-speed factory processes can be predicted and mitigated instantly before a failure occurs.

To learn more about using machine learning models with Couchbase Lite, read this blog post.

With the Predictive Query API, Couchbase Lite fulfills the mobile device side of the “hybrid AI” concept.

AI from Cloud to Edge to Device

By combining Couchbase Mobile’s AI capabilities with its native support for edge computing architectures, organizations can layer AI-processing and data-processing workloads into any topology required for the fastest and most scalable integrated AI systems, all running on a standard database technology.

With this architecture, AI workloads are processed where it makes the most sense for a given model’s complexity and amount of data required. Deep learning training and the application of large models happen against immense amounts of data in the cloud, which offers the scale to efficiently train deep learning models and apply them en masse, while smaller machine learning models run at the edge directly on edge devices where they take advantage of local data for immediate engagement and interaction.

And the AI system also benefits greatly from being part of a single data technology ecosystem because it uses the same database, queries and APIs across the stack, making development and ongoing maintenance much easier than with a sprawling mashup of disparate database technologies.

Edge computing is a necessity for AI to realize its full promise today and in the future, and Couchbase’s edge native, AI-ready modern database is ready to help forge the next big advancement.
