Today we are pleased to announce three major advancements for Capella, the cloud database platform for modern applications, including GenAI, vector search, and mobile application services.

First is the general availability of Capella Columnar, which enables real-time, zero-ETL, JSON-native data analytics that runs independently of operational workloads within a single database platform.

Also generally available is Couchbase Mobile with vector search, which lets customers offer semantic, similarity and hybrid search in edge applications that work even without an internet connection.

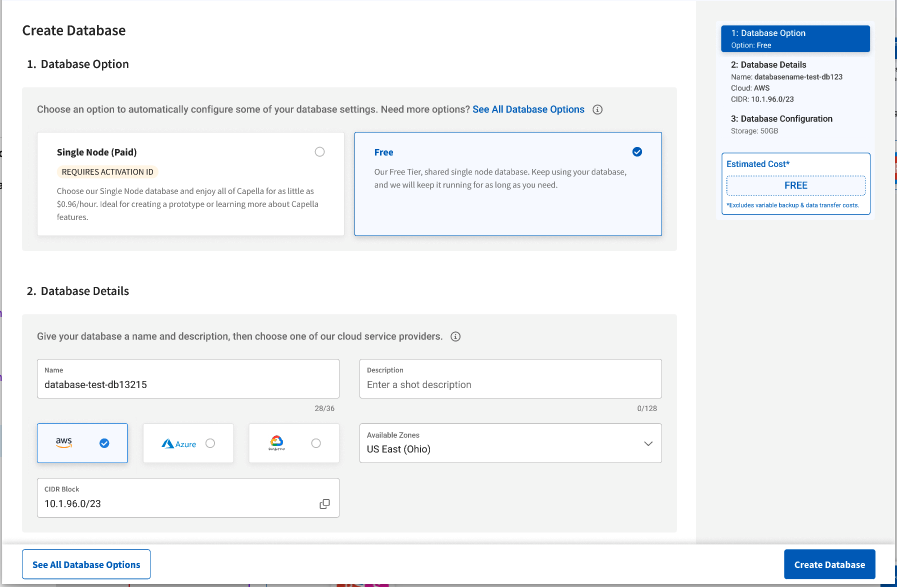

Today we also announced Capella Free Tier, a perpetual free workspace in the Capella Database-as-a-Service designed to help developers get started on Capella without any financial commitment, making it ideal for learning, development, and small projects.

Capella Columnar Converges Operational and Real-time Analytics

Many organizations, including Couchbase customers, have embraced the flexibility of JSON when building business-critical applications. However, while JSON is often the programmer’s preferred data format, it can be difficult to use for traditional analytic systems that expect data to conform to more rigid structures. Without formal structures, business intelligence teams spend too much time on data transformation and hygiene just to include operational JSON data in their analysis. This is why so much JSON data remains dormant.

Couchbase is the only NoSQL database vendor that offers key-value and columnar storage options for operational and analytic workloads on a single platform, giving customers the power and flexibility to make JSON data useful in analytics.

Capella Columnar addresses the challenge of parsing, transforming and persisting JSON data into an analysis-ready columnar format. It supports real-time, multi-source ingestion of data not only from Couchbase but also, through common systems like Kafka, from third-party JSON or SQL databases.

By using Capella iQ, our AI-powered coding assistant, Capella Columnar simplifies analysis by writing SQL++ for developers, eliminating the need to wait for the BI team. Once an important metric is calculated, it can immediately be written back to the Capella operational cluster, which can use the metric data within the application.

Capella Columnar reduces latency, complexity and cost to empower organizations to build real-time adaptive applications. For example, it can enable more personalized experiences in an e-commerce application so retailers can provide custom offers that enhance revenue, or build in customer-facing metrics in a gaming application to accelerate engagement. As AI enhances these applications, Capella Columnar positions Couchbase to meet the growing demand for high-performing, personalized and intelligent adaptive solutions.

- Join our upcoming webcast on Capella Columnar.

- See Capella Columnar in action in the following demo video:

Couchbase Mobile with Vector Search Enables Cloud-to-Edge AI

Search is a ubiquitous means of user interaction in mobile apps, and to make an impact it should always return personalized responses in context. But searching only for specific words and phrases is not enough to produce accurate results that truly engage. To make a personal connection, you need semantic search that returns matches that are meaningful to the user. This is where vector search comes in. Vector search goes beyond simply finding matching words: it finds related information based on the core meaning of the input, making it the best option for providing relevant information that connects with users.
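To make the idea concrete, here is a minimal sketch of similarity ranking in plain Python. It is an illustration of the general technique only, not Couchbase's implementation or API: the three-dimensional vectors are hand-written stand-ins for real embeddings, and the names `cosine_similarity`, `nearest` and `DOCS` are hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def nearest(query_vec, docs, k=2):
    """Return the text of the k documents whose vectors are closest to the query."""
    ranked = sorted(
        docs,
        key=lambda doc: cosine_similarity(query_vec, doc[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:k]]

# Hypothetical, hand-written embeddings; a real app would get these from an embedding model.
DOCS = [
    ("waterproof hiking boots", [0.9, 0.1, 0.0]),
    ("trail running shoes", [0.8, 0.3, 0.1]),
    ("espresso machine", [0.0, 0.2, 0.9]),
]

# Stand-in for the embedding of a query such as "footwear for mountain walks".
query = [1.0, 0.0, 0.0]
print(nearest(query, DOCS))
```

Neither footwear result shares a word with the query text, yet both rank above the unrelated item because their vectors point in a similar direction.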

Most other database vendors only offer vector search in the cloud, making the functionality subject to internet latency, and rendering it useless without connectivity – not good for mobile apps! What’s needed is a way to take advantage of vector search and AI at the edge without being dependent on the internet.

The release of Couchbase Mobile 3.2 completes our “cloud-to-edge AI” vision by offering vector search on-device with Couchbase Lite, the embedded database for mobile and IoT apps. Now, mobile developers can take advantage of vector search capabilities at the edge without dependencies on the internet, enabling the fastest, most secure and most reliable GenAI apps possible.

What’s more, vector search support in Couchbase Mobile makes output from generative AI more accurate by enabling Retrieval Augmented Generation, or “RAG”, an architectural technique where local on-device vector data is passed along with prompts to provide better precision and context for LLM responses. This allows developers to leverage RAG for GenAI to create personalized, engaging apps that work at the edge without compromising privacy.
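The retrieval step of RAG can be sketched in a few lines of plain Python. This is an illustrative sketch under stated assumptions, not Couchbase Lite code: `LOCAL_STORE`, `retrieve` and `build_prompt` are hypothetical names, the two-dimensional vectors are hand-written stand-ins for real embeddings, and the call to an LLM is left out.

```python
def dot(a, b):
    """Dot product, used here as a simple similarity score."""
    return sum(x * y for x, y in zip(a, b))

def retrieve(query_vec, store, k=1):
    """Return the k locally stored passages most similar to the query vector."""
    ranked = sorted(store, key=lambda item: dot(query_vec, item["vector"]), reverse=True)
    return [item["text"] for item in ranked[:k]]

def build_prompt(question, passages):
    """Combine retrieved passages with the user's question into one prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )

# Hypothetical on-device data; the vectors would normally come from an embedding model.
LOCAL_STORE = [
    {"text": "Store hours are 9am to 6pm on weekdays.", "vector": [1.0, 0.0]},
    {"text": "Returns are accepted within 30 days.", "vector": [0.0, 1.0]},
]

question = "Can I return an item after two weeks?"
question_vec = [0.1, 0.9]  # stand-in for the question's embedding

prompt = build_prompt(question, retrieve(question_vec, LOCAL_STORE))
print(prompt)  # this augmented prompt is what would be sent to the LLM
```

Only the passage relevant to the question is added to the prompt, so the model answers from local data rather than from its general training.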

Running vector search at the edge, including on-device, also brings specific security and privacy benefits for AI-powered features. Processing data at the edge protects privacy because sensitive data never has to leave the edge. Developers can build GenAI apps that run on-device or at the edge without the worry of feeding sensitive or private data to public models.

All of these benefits are amplified when combined with vector search in Couchbase Capella and Couchbase Server, enabling cloud-to-edge AI support.

Use cases for vector search at the edge include:

- Retail: Embedded data and AI processing in store kiosks and POS apps enhance the checkout and item lookup process with image matching powered by vector search, all with no dependencies on the internet.

- Mobile Gaming: Enhance gameplay with LLM-based non-player characters that interact more naturally with real players. On-device processing eliminates game downtime.

- Healthcare: Enable personalized apps that engage patients in context using local data. By processing data and AI on-device, patient information is kept confidential and private.

Other use cases include chatbots, fraud detection, predictive maintenance, and the list goes on!

- Read this blog to learn more about Couchbase Mobile vector search details and use cases.

- The following video explains the benefits of cloud-to-edge vector search with Couchbase Mobile 3.2:

Couchbase Capella Free Tier Speeds Learning and Adoption

With the introduction of Capella Free Tier, Couchbase is giving developers the time they need to learn Capella at their own pace, retain work and promote projects into test and production environments without concern for billing or expiration dates. With pre-configured cluster templates, ranging from one to five nodes, the Capella Free Tier simplifies learning, developing and deploying applications on Capella into production.

The Couchbase Capella Free Tier offers several benefits that make it an attractive option for developers and small projects:

No Cost: Developers start with Capella without any financial commitment, making it ideal for learning, development, and small projects.

Perpetual: The free tier is available in perpetuity, with no time limits so long as the database is actively used, making it suitable for exploring and evaluating new features as they become available. This can also foster collaboration, as developers can invite other users to work on and iterate over their projects as they evolve.

Optimized Developer Experience: Learning is a continuous journey. The perpetual nature of the free tier lends itself to that experience, so a user’s learning journey is not abruptly terminated after 30 days.

By unlocking complimentary access for developers to Capella DBaaS, Couchbase helps developers work faster to build adaptive applications.

Capella’s Free Tier is available starting on Monday, September 9.

Developer Productivity Advancements

To accelerate developer productivity, Couchbase Capella provides Capella iQ, the AI-powered coding assistant built into the Capella Workbench, as well as popular IDEs like Visual Studio Code and JetBrains. With iQ, Capella helps accelerate application development by enabling developers to issue plain language prompts that are turned into sophisticated SQL queries and starter code snippets.

Other new/updated resources that accelerate developer productivity include these plugins and extensions:

- Couchbase Shell (cbshell) v1.0, now with vector search support.

- Couchbase Lite for Ionic cross-platform mobile application development.

- Ruby Couchbase Object Relational Mapping library (ORM).

There are also many new AI integrations for developers to leverage:

- LangChain Extensions:

  - Semantic Cache (powered by vector search)

  - Chat Message History Store (conversational cache)

- NVIDIA NIM/NeMo: Accelerate the retrieval and generation phases of Couchbase-powered RAG pipelines using NVIDIA NIM/NeMo. Learn more in this blog.

- Langflow: Low-code visual framework for building multi-agent and RAG applications using Couchbase as a vector store.

- Vectorize: Experiment and build a performant RAG pipeline to ensure that GenAI applications and AI agents always have the most relevant context.

Summary

Carl Olofson, research vice president at IDC, shares the benefits of these advancements:

“Couchbase already provides a highly flexible data management capability by blending its base JSON document model with the ability to manage the data in a networked way. Now they have added blended analytic-transaction processing support that leverages the performance advantage of columnar data management together with vector search in support of applications demanding intelligent data access at the speed of business. These are capabilities that the market has been looking for but are hard to find contained in a single product.”

With Capella Columnar and Couchbase Mobile vector search capabilities in one database platform, coupled with the ability to start with zero friction using Capella Free Tier as well as leverage AI integrations, Couchbase helps customers reduce costs and simplify operations, while enabling developers to create trustworthy, AI-powered adaptive applications that run from cloud to edge.

What Next?

- Join our Capella Columnar webcast

- Start using Couchbase Capella today

- Watch our demo video playlist: