LLM embeddings are numerical representations of words, sentences, or other data that capture semantic meaning, enabling efficient text processing, similarity search, and retrieval in AI applications. They are generated through neural network transformations, particularly using self-attention mechanisms in transformer models like GPT and BERT, and can be fine-tuned for domain-specific tasks. These embeddings power a wide range of applications, including search engines, recommendation systems, virtual assistants, and AI agents, with tools like Couchbase Capella™ streamlining their integration into real-world solutions.

What are LLM embeddings?

LLM embeddings are numerical representations of words, sentences, or other data types that capture semantic meaning in a high-dimensional space. They allow large language models (LLMs) to process, compare, and retrieve text efficiently. Instead of handling raw text directly, LLMs convert input data into vectors, and inputs with similar meanings end up close together in that vector space. This clustering enables contextual understanding, similarity search, and efficient knowledge retrieval for a wide variety of tasks, including natural language understanding and recommendation systems.

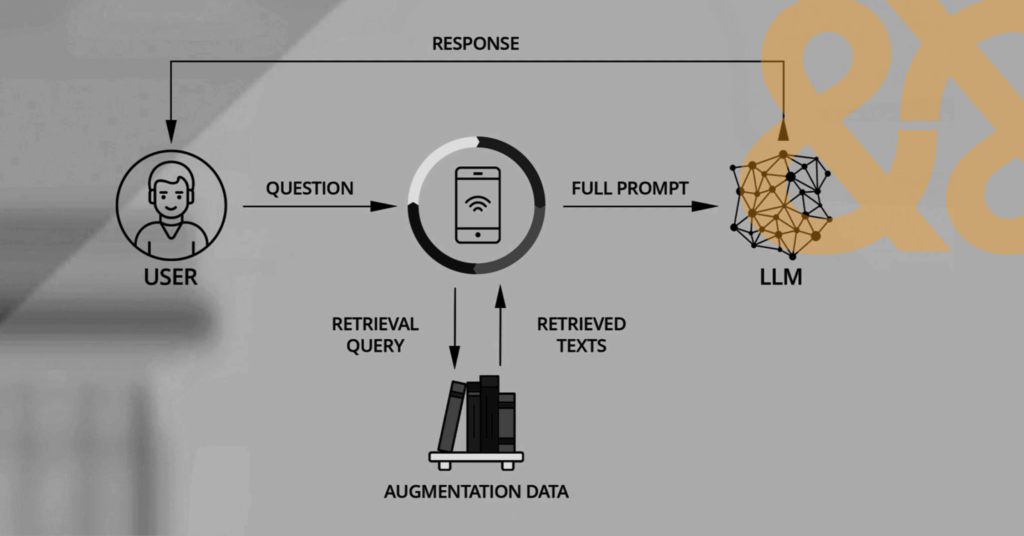

A typical application that helps build embeddings based on user input in preparation for use by an LLM

How do embeddings work?

LLMs create embeddings by passing text through layers of neural network transformations that map input tokens into a vector space. These transformations capture syntactic and semantic relationships, so words with similar meanings end up with vector representations that lie close together. Transformer-based models such as GPT and BERT use self-attention mechanisms to weight how much each surrounding word contributes and refine every token's embedding based on that context. Because embeddings are numerical, the similarity between two pieces of text can be computed directly, typically with cosine similarity or a dot product, which makes comparisons, clustering, and retrieval efficient.
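To make "closer vector representations" concrete, here is a minimal Python sketch of cosine similarity, one common way to compare embeddings. The three 4-dimensional vectors are hypothetical, hand-picked values rather than output from any real model.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 = same direction, 0.0 = orthogonal."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)

# Hypothetical 4-dimensional embeddings, hand-picked for illustration only.
# Real models produce hundreds or thousands of dimensions.
embeddings = {
    "cat":     [0.8, 0.1, 0.6, 0.0],
    "kitten":  [0.7, 0.2, 0.7, 0.1],
    "invoice": [0.0, 0.9, 0.1, 0.8],
}

print(cosine_similarity(embeddings["cat"], embeddings["kitten"]))
print(cosine_similarity(embeddings["cat"], embeddings["invoice"]))
```

Related words like "cat" and "kitten" score close to 1.0, while the unrelated "invoice" scores much lower.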

You can also fine-tune pre-trained embedding models for domain-specific applications to improve performance for specialized tasks like legal or medical document retrieval. To improve output further, you can use retrieval-augmented generation (RAG): before generating a response, the application embeds the user's query, retrieves the most relevant passages from an external knowledge base, and adds them to the LLM's prompt. Couchbase can help you build end-to-end RAG applications using vector search in tandem with the popular open source LLM framework LangChain.
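The retrieve-then-augment step of RAG can be sketched without any framework. This is a minimal illustration, not Couchbase's or LangChain's API: the `embed` function is a hypothetical bag-of-words stand-in for a real embedding model, and the knowledge base is made-up sample text.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())

def build_vocab(docs: list[str]) -> list[str]:
    return sorted({tok for doc in docs for tok in tokenize(doc)})

def embed(text: str, vocab: list[str]) -> list[float]:
    """Hypothetical stand-in for an embedding model: word counts over a fixed vocabulary."""
    counts = Counter(tokenize(text))
    return [float(counts[word]) for word in vocab]

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def retrieve(query, docs, vocab, k=1):
    """Return the k documents whose embeddings are most similar to the query's."""
    q = embed(query, vocab)
    ranked = sorted(docs, key=lambda d: cosine(q, embed(d, vocab)), reverse=True)
    return ranked[:k]

def build_prompt(query, context_docs):
    """Augment the user's question with retrieved context before calling an LLM."""
    context = "\n".join(f"- {d}" for d in context_docs)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

knowledge_base = [
    "Refunds are processed within 5 business days.",
    "Our office is closed on public holidays.",
    "Premium members get free shipping on all orders.",
]
vocab = build_vocab(knowledge_base)
question = "How long do refunds take to be processed?"
print(build_prompt(question, retrieve(question, knowledge_base, vocab, k=1)))
```

In a production system, the document embeddings would be computed once and stored in a vector index rather than recomputed on every query, and the assembled prompt would then be sent to an LLM.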

Components of LLMs

LLMs consist of several key components that work together to generate embeddings and process text. These components collectively enable LLMs to capture deep linguistic relationships and produce meaningful embeddings:

- The tokenization layer breaks input into words, subwords, or characters and converts them into integer token IDs.

- The embedding layer transforms these tokens into high-dimensional vectors.

- The attention mechanism, particularly self-attention, determines how words influence each other based on context.

- The feedforward layers further transform each token's representation, and a final output layer turns the result into predictions.

- Positional encoding adds information about word order, which self-attention alone does not capture, so the model can process text coherently.
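The sketch below wires together three of these components (embedding lookup, positional encoding, and self-attention) in plain Python. It makes simplifying assumptions: the token IDs and embedding table are random placeholders, the positional encoding is the sinusoidal scheme from the original Transformer paper (many models, including BERT and GPT-2, learn positional embeddings instead), and attention uses Q = K = V = x rather than learned projection matrices. Feedforward layers are omitted.

```python
import math
import random

def positional_encoding(seq_len, d_model):
    """Sinusoidal encodings: even dims use sin, odd dims use cos, at decreasing frequencies."""
    pe = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            angle = pos / (10000 ** ((i - i % 2) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x):
    """Single-head scaled dot-product self-attention with Q = K = V = x (simplified)."""
    d = len(x[0])
    out = []
    for q in x:
        # Similarity of this token's query to every token's key, scaled by sqrt(d).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in x]
        weights = softmax(scores)
        # Output is the attention-weighted average of all value vectors.
        out.append([sum(w * v[j] for w, v in zip(weights, x)) for j in range(d)])
    return out

rng = random.Random(0)
d_model = 4
# Hypothetical embedding table for a 10-token vocabulary (learned in a real model).
embedding_table = [[rng.uniform(-1.0, 1.0) for _ in range(d_model)] for _ in range(10)]
token_ids = [3, 7, 1]  # hypothetical IDs from a tokenizer

x = [embedding_table[t] for t in token_ids]                     # 1. embedding lookup
pe = positional_encoding(len(token_ids), d_model)
x = [[a + b for a, b in zip(row, p)] for row, p in zip(x, pe)]  # 2. add position info
contextual = self_attention(x)                                  # 3. mix in context
print(len(contextual), len(contextual[0]))
```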

Unimodal vs. multimodal embeddings

Unimodal embeddings represent a single data type, such as text, images, or audio, within a specific vector space. Text embeddings, for example, focus solely on linguistic patterns.

Multimodal embeddings integrate multiple data types into a shared space, allowing models to process and relate different modalities. Multimodal embeddings are crucial for applications like video captioning, voice assistants, and cross-modal search, where different data types must interact seamlessly. For example, OpenAI’s CLIP model aligns text and image embeddings to enable text-based image retrieval.
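The idea of a shared space can be illustrated with a toy example: two encoders produce features of different sizes, and a projection for each modality maps them into a common space where cosine similarity works across modalities. All features and projection matrices here are hypothetical; in CLIP-style training the projections are learned so that matching text and image pairs line up, and this is not CLIP's actual API.

```python
import math

# Hypothetical encoder outputs: text features are 3-D, image features are 4-D,
# so they cannot be compared directly.
text_features = {
    "a photo of a dog": [1.0, 0.0, 0.2],
    "a photo of a car": [0.0, 1.0, 0.1],
}
image_features = {
    "dog.jpg": [0.9, 0.1, 0.0, 0.3],
    "car.jpg": [0.0, 0.2, 1.0, 0.1],
}

# Hypothetical projection matrices into a shared 2-D space (hand-picked, not learned).
W_text = [[1.0, 0.0, 0.0],
          [0.0, 1.0, 0.0]]        # 2 x 3
W_image = [[1.0, 0.0, 0.0, 0.0],
           [0.0, 0.0, 1.0, 0.0]]  # 2 x 4

def project(W, v):
    """Matrix-vector product: map a modality-specific vector into the shared space."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]

def search_images(query):
    """Rank images by cosine similarity to a text query in the shared space."""
    q = normalize(project(W_text, text_features[query]))
    scores = {}
    for name, feats in image_features.items():
        img = normalize(project(W_image, feats))
        scores[name] = sum(a * b for a, b in zip(q, img))
    return sorted(scores, key=scores.get, reverse=True)

print(search_images("a photo of a dog"))
print(search_images("a photo of a car"))
```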

Types of embeddings

Embeddings vary depending on their structure and intended use:

- Word embeddings represent individual words based on co-occurrence patterns.

- Sentence embeddings encode entire sentences to capture broader contextual meaning.

- Document embeddings extend this to longer bodies of text, such as paragraphs or entire documents.

- Cross-modal embeddings align different data types into a shared space to facilitate interactions between text, images, and audio.

- Domain-specific embeddings are fine-tuned on specialized datasets to enhance performance for areas like medicine or finance.

Each type of embedding serves different tasks, such as search optimization or content recommendation.
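One common way to get a sentence embedding from token-level vectors is mean pooling: averaging the vectors of the sentence's tokens. The minimal sketch below assumes a hypothetical table of 3-dimensional word vectors; with an LLM, you would pool the model's contextual token outputs instead.

```python
# Hypothetical 3-D word vectors; a real model would supply these.
word_vectors = {
    "the": [0.1, 0.1, 0.1],
    "cat": [0.9, 0.2, 0.1],
    "sat": [0.2, 0.8, 0.1],
    "on":  [0.1, 0.1, 0.2],
    "mat": [0.7, 0.1, 0.6],
}

def mean_pool(tokens, vectors):
    """Average the vectors of known tokens into one fixed-size embedding."""
    known = [vectors[t] for t in tokens if t in vectors]
    if not known:
        raise ValueError("no known tokens to pool")
    dim = len(known[0])
    return [sum(v[i] for v in known) / len(known) for i in range(dim)]

sentence = "the cat sat on the mat".split()
print(mean_pool(sentence, word_vectors))
```

Whatever the sentence length, the result has the same dimension as the input vectors, which is what lets sentences of different lengths be compared directly.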

Use cases for LLM embeddings

LLM embeddings power a wide range of applications by enabling efficient text and data comparisons:

- Search engines improve relevance by retrieving documents with similar semantic meaning rather than just keyword matches.

- Chatbots and virtual assistants use embeddings to understand queries and generate context-aware responses.

- Recommendation systems use embeddings to suggest content based on user preferences.

- Fraud detection uses embeddings to help identify patterns in financial transactions.

- Code completion tools rely on embeddings to suggest relevant functions.

- Embeddings also enhance summarization, translation, and personalized learning platforms.
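As one illustration of the recommendation use case, the sketch below builds a user profile by averaging the embeddings of items a user liked, then ranks unseen items by cosine similarity to that profile. The item names and vectors are hypothetical, and real recommenders usually combine this with other signals.

```python
import math

# Hypothetical item embeddings (3-D for readability).
items = {
    "sci-fi novel":      [0.9, 0.1, 0.0],
    "space documentary": [0.8, 0.3, 0.1],
    "cookbook":          [0.0, 0.2, 0.9],
    "robotics magazine": [0.7, 0.6, 0.0],
}

def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def recommend(liked, catalog, k=2):
    """Average the liked items' vectors into a profile, then rank unseen items by similarity."""
    if not liked:
        raise ValueError("need at least one liked item to build a profile")
    dim = len(next(iter(catalog.values())))
    profile = [sum(catalog[name][i] for name in liked) / len(liked) for i in range(dim)]
    candidates = [name for name in catalog if name not in liked]
    candidates.sort(key=lambda name: cosine(profile, catalog[name]), reverse=True)
    return candidates[:k]

print(recommend(["sci-fi novel"], items))
```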

AI agents, which use GenAI to mimic and automate human reasoning and processes, are the hottest new use case for LLMs. Couchbase Capella’s AI Services help developers build AI agents faster by addressing many of the most critical GenAI challenges, including trustworthiness and cost.

How to choose an embedding approach

The best embedding approach for your project depends on the tasks you want to perform, the type of data you're working with, and the level of accuracy you require. Embeddings from pre-trained models such as BERT or GPT are effective for general language understanding, but if domain-specific precision is crucial, you'll want to fine-tune the model on specialized datasets. Cross-modal tasks require multimodal embeddings, while high-speed retrieval applications benefit from dense vector search libraries such as Faiss.
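The simplest form of dense vector search is exhaustive: score the query against every stored vector and keep the top k. The sketch below shows that exact search in plain Python with hypothetical, pre-normalized vectors. Libraries such as Faiss run this kind of flat search in optimized native code and also offer approximate indexes that trade a little recall for much faster queries on large collections.

```python
import heapq

def top_k_inner_product(query, vectors, k):
    """Exact (brute-force) search: score every stored vector, keep the k best.

    Returns (index, score) pairs sorted by descending inner product. On
    L2-normalized vectors, the inner product equals cosine similarity.
    """
    scored = (
        (sum(q * v for q, v in zip(query, vec)), idx)
        for idx, vec in enumerate(vectors)
    )
    best = heapq.nlargest(k, scored)
    return [(idx, score) for score, idx in best]

# Hypothetical, already-normalized 2-D vectors.
stored = [[1.0, 0.0], [0.0, 1.0], [0.6, 0.8], [0.8, 0.6]]
print(top_k_inner_product([1.0, 0.0], stored, k=2))
```

Exhaustive search costs O(N·d) per query for N vectors of dimension d, which is why approximate indexes become important as collections grow.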

The complexity of your use case will determine whether a lightweight model will suffice or whether a deep transformer-based approach is necessary. You should also consider computational costs and storage constraints when selecting an embedding strategy that meets your requirements.

How to embed data for LLMs

Embedding data involves preprocessing text, tokenizing it, and passing it through an embedding model to obtain numerical vectors. Tokenization splits text into tokens (words, subwords, or characters), which the model's embedding layer then maps into a high-dimensional space. The model refines these embeddings through multiple layers of neural transformations.
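The first steps of this pipeline can be sketched as follows. This is a simplified illustration under stated assumptions: a hypothetical six-entry vocabulary, a whitespace tokenizer (real models use subword tokenizers), and a randomly initialized embedding matrix standing in for learned weights.

```python
import random
import re

# Hypothetical toy vocabulary; real tokenizers use tens of thousands of subword units.
VOCAB = {"[UNK]": 0, "embeddings": 1, "map": 2, "text": 3, "to": 4, "vectors": 5}

def preprocess(text):
    """Lowercase and replace punctuation with spaces (real pipelines vary)."""
    return re.sub(r"[^\w\s]", " ", text.lower())

def tokenize(text):
    """Whitespace tokenizer mapping each word to an ID, unknown words to [UNK]."""
    return [VOCAB.get(word, VOCAB["[UNK]"]) for word in preprocess(text).split()]

# Hypothetical embedding matrix: one row of d_model floats per vocabulary entry.
# In a trained model these rows are learned parameters, not random numbers.
d_model = 8
rng = random.Random(42)
EMBEDDING_MATRIX = [[rng.gauss(0.0, 1.0) for _ in range(d_model)] for _ in VOCAB]

def embed_tokens(token_ids):
    """Embedding-layer lookup: token ID -> row of the embedding matrix."""
    return [EMBEDDING_MATRIX[i] for i in token_ids]

ids = tokenize("Embeddings map text to vectors!")
vectors = embed_tokens(ids)
print(ids)
print(len(vectors), len(vectors[0]))
```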

Once generated, you can store embeddings for efficient retrieval, or fine-tune the underlying model for specific tasks. Tools like OpenAI's embedding API, Hugging Face Transformers, or TensorFlow's embedding layers simplify the process. Post-processing steps, such as normalization or dimensionality reduction, improve efficiency for downstream applications like clustering and search.
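Normalization and dimensionality reduction can both be sketched in a few lines. This example uses L2 normalization (after which a dot product equals cosine similarity) and a Gaussian random projection as the reduction step; random projection is one simple technique among several, with PCA being another common choice. The input vector and target dimension are hypothetical.

```python
import math
import random

def l2_normalize(v):
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in v]

def random_projection_matrix(in_dim, out_dim, seed=0):
    """Gaussian random projection with entries drawn from N(0, 1/out_dim).

    This preserves squared lengths in expectation and, with enough output
    dimensions, approximately preserves pairwise distances with high probability
    (Johnson-Lindenstrauss lemma).
    """
    rng = random.Random(seed)
    std = 1.0 / math.sqrt(out_dim)
    return [[rng.gauss(0.0, std) for _ in range(in_dim)] for _ in range(out_dim)]

def project(matrix, v):
    return [sum(m * x for m, x in zip(row, v)) for row in matrix]

# Hypothetical 6-D embedding reduced to 3-D.
embedding = [3.0, 4.0, 0.0, 0.0, 0.0, 0.0]
unit = l2_normalize(embedding)
R = random_projection_matrix(in_dim=6, out_dim=3)
reduced = l2_normalize(project(R, unit))
print(unit)
print(len(reduced))
```

Re-normalizing after projection keeps the reduced vectors comparable with a plain dot product.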

For Couchbase’s customers who store JSON documents in Capella, we’ve eliminated the need to build a custom embedding system. Capella’s Vectorization Service accelerates your AI development by seamlessly converting the data into vector representations.

Key takeaways and next steps

LLM embeddings are a critical component of AI-powered applications such as search engines, virtual assistants, recommendation systems, and AI agents. They enable highly efficient text and data comparisons that drive meaningful outputs and excellent user experiences.

Couchbase Capella’s unified developer data platform supports popular LLMs and is ideal for building and running search, agentic AI, and edge apps that take advantage of LLM embeddings. Capella includes Capella iQ, an AI-powered coding assistant that helps developers write SQL queries, create test data, and choose the right indexes to reduce query times. You can get up and running on our free tier in minutes with no credit card needed.

FAQ

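Do LLMs use word embeddings? Yes, but not in the traditional sense. LLMs start from a learned embedding table that assigns each token (often a subword rather than a whole word) a fixed vector, then transform those vectors into contextual embeddings. Unlike static embeddings from methods like Word2Vec, these contextual embeddings change based on the surrounding text.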
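The difference between static and contextual embeddings can be shown with a toy example. The 2-dimensional vectors are hypothetical, and the `contextualize` function is a deliberately crude stand-in for self-attention that simply averages each token's vector with its immediate neighbors.

```python
# Hypothetical 2-D static vectors: a lookup table gives "bank" one vector
# regardless of context, as Word2Vec-style embeddings do.
STATIC = {
    "river": [0.0, 1.0],
    "bank":  [0.5, 0.5],
    "money": [1.0, 0.0],
    "the":   [0.1, 0.1],
}

def contextualize(tokens, window=1):
    """Toy contextualizer: average each token's vector with its neighbors' vectors.

    A crude stand-in for self-attention, which learns how much each
    neighbor should contribute instead of weighting them equally.
    """
    out = []
    for i in range(len(tokens)):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        neighborhood = [STATIC[t] for t in tokens[lo:hi]]
        dim = len(neighborhood[0])
        out.append([sum(v[d] for v in neighborhood) / len(neighborhood) for d in range(dim)])
    return out

a = contextualize(["the", "river", "bank"])[2]
b = contextualize(["the", "money", "bank"])[2]
print(a, b)  # "bank" starts from the same static vector but ends up different
```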

What are embedding models in LLM? Embedding models in LLMs convert text into high-dimensional numerical vectors that capture semantic meaning. These models help LLMs process, compare, and retrieve text efficiently.

What is an example of an embedding model? OpenAI’s text-embedding models (e.g., text-embedding-3-small and text-embedding-3-large) generate embeddings for search, clustering, and retrieval tasks. Other examples include BERT-based models and SentenceTransformers.

What is the difference between tokens and embeddings in LLM? Tokens are discrete units of text (words, subwords, or characters) that LLMs process, while embeddings are the numerical vector representations of those tokens. Embeddings encode semantic relationships that enable models to understand meaning.

Why do LLMs tokenize? Tokenization breaks text into smaller units so LLMs can efficiently process and generate embeddings. This allows the model to handle diverse languages, rare words, and different sentence structures.
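Here is a minimal sketch of how subword tokenization handles words that are not in the vocabulary. It implements greedy longest-match-first splitting in the style of WordPiece (the scheme BERT uses) over a tiny hypothetical vocabulary; GPT models use byte-level byte-pair encoding instead, and this is not any library's tokenizer.

```python
# Hypothetical toy subword vocabulary. "##" marks a piece that continues a word,
# following the WordPiece convention.
VOCAB = {"[UNK]", "token", "##ization", "embed", "##ding", "##s", "un", "##related"}

def wordpiece(word, vocab=VOCAB):
    """Greedy longest-match-first subword split of a single word."""
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        match = None
        # Try the longest remaining substring first, shrinking until one is in the vocab.
        while end > start:
            piece = word[start:end] if start == 0 else "##" + word[start:end]
            if piece in vocab:
                match = piece
                break
            end -= 1
        if match is None:
            return ["[UNK]"]  # no piece fits: the whole word becomes unknown
        pieces.append(match)
        start = end
    return pieces

print(wordpiece("tokenization"))
print(wordpiece("embeddings"))
print(wordpiece("unrelated"))
print(wordpiece("xyz"))
```

Because "embeddings" can be assembled from known pieces, the vocabulary never needs a dedicated entry for it; only a word with no matching pieces falls back to `[UNK]`.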