Couchbase Lite’s Predictive Query API allows applications to leverage pre-trained machine learning (ML) models to run predictive queries against data in an embedded Couchbase Lite database in a convenient, fast and always-available way. These predictions can be combined with predictions made against real-time data captured by your app to enable a range of compelling applications. The Predictive Query API in Couchbase Lite is the first of its kind in an embedded database. We announced the Developer Preview version of the Predictive Query API with Couchbase Mobile 2.5 last year. With Couchbase Mobile 2.7, we are happy to announce the General Availability of this feature.

In this post, we provide an overview of the feature including context around why we built it and the kinds of applications that it can enable. We also demonstrate the use of the Predictive API with an example. You can refer to the documentation pages for specifics on the API and sample code snippets.

Machine Learning on Mobile – Motivation

Machine learning has traditionally been done in the cloud. With access to “unbounded” compute and storage resources, it seemed like the only viable place to do it. While that may be true for certain applications, there are a number of reasons to perform machine learning locally on the mobile device.

Fast & Responsive

By avoiding the round trip to the cloud, response times for predictions can be kept on the order of a few milliseconds regardless of the state of the network connection. This matters because the responsiveness of an app directly shapes the end user experience, which in turn drives app retention.

Always Available

By running machine learning locally on the device, you can make predictions even in a disconnected state. Your application and your data are always available. This is particularly relevant for field applications deployed in disconnected environments.

Data Privacy

Running predictions locally on the device means that sensitive data never has to leave your device. You can use this to build apps with highly personalized experiences without compromising data privacy.

Bandwidth Savings

By avoiding the need to transfer data to the cloud, you can save on bandwidth costs. This is particularly important in bandwidth-constrained environments where data plans come at a premium.

All of the benefits above align well with the paradigm of edge computing, where there is a growing need to bring storage and compute closer to the edge.

Mobile ML – Ecosystem

Mobile ML is a reality and there are several ecosystem enablers that make that possible.

Powerful Mobile Devices

Mobile devices are becoming incredibly powerful. For instance, the recently launched iPhone 11 and Pixel 4 include dedicated neural engines. Innovation at the silicon layer makes it feasible to run ML models locally on mobile devices. By taking advantage of platform hardware acceleration capabilities, the models can run in a performant manner.

Mobile Optimized Models

Machine learning models have traditionally been storage and compute intensive. Several projects are underway to optimize models for resource utilization while maintaining the required level of accuracy. There is a growing network of open-source pre-trained ML models, such as MobileNet and SqueezeNet, that are optimized to run on mobile devices.

Machine Learning Frameworks

All the major mobile platforms include support for machine learning frameworks and libraries that make it extremely easy for developers to integrate ML support into their apps. These frameworks leverage hardware acceleration and low-level APIs, which makes them very performant. Examples include Core ML for iOS, TensorFlow Lite for Android, ML.NET for Windows and ML Kit, among others.

Creating ML Models

There is a growing number of tools and platforms, such as TensorFlow and Create ML, that make it easier than ever to create machine learning models. You don’t need to be a data scientist to create one.

Predictive Query API

The Predictive Query API enables mobile apps to leverage pre-trained machine learning (ML) models to make predictions on Couchbase Lite data. The API can be used to combine predictions made in real time on data input into the app with predictions on app data stored in Couchbase Lite.

By building a prediction index at write time, users can see significant improvements in the performance of their queries. The prediction results can be materialized in Couchbase Lite and synced over to other clients via Sync Gateway.

Applications

There are several applications of machine learning on mobile, including image classification, voice recognition, object recognition and content-based recommender systems. Combining those predictions with data stored in Couchbase Lite has the potential to revolutionize day-to-day activities across several industries. All these applications benefit from running machine learning locally: guaranteed high availability, faster response, efficient network bandwidth usage and data privacy. Here are a few examples.

Retail & e-commerce

Consider this workflow:

– You walk into a store with a photo of an item of interest. For instance, a photo of a handbag that you saw on someone.

– You walk up to the sales clerk to enquire if the item is available.

– The sales clerk takes a photo of the item with a tablet that is running a catalog / inventory app.

– The captured image is used to search the store catalog database to determine its availability.

– If it’s available in the store, you are directed to the right aisle. You can also be presented with alternatives and recommendations on other related items.

Image-based search augments the more traditional text-based search and even voice-based search experiences, which rely on the user’s ability to describe the item of interest. Applications with image-based search can significantly improve both the online and in-store shopping experience. While the advantages of image-based search are quite obvious for e-commerce applications, workflows such as the one described above provide an opportunity for traditional brick-and-mortar stores to compete with online shopping experiences.

Behind the scenes, the catalog/inventory app uses an image classifier ML model to identify the item captured by the tablet camera. Once the item is identified, the app queries the local Couchbase Lite database to check whether the item is available at the store and retrieves other relevant details, such as whether it is in stock and the aisle where it can be found. The images never leave the store app and can be locally deleted after use, alleviating any privacy concerns.

Hospitality

Consider a self-ordering kiosk at a fast food restaurant. Here is a typical workflow.

– You walk up to the kiosk equipped with a camera that captures your image (you have to opt in).

– The captured face image is used to look up the database of registered patrons to identify your preferences.

– The system can pull up order history, suggest meal orders, apply loyalty points and so on. Your order can be placed using credit card info on file.

Self-ordering kiosks are fairly ubiquitous and have revolutionized the meal ordering experience by reducing wait times, resulting in faster service. Equipping these kiosks with facial recognition technology can make that experience even faster and more seamless.

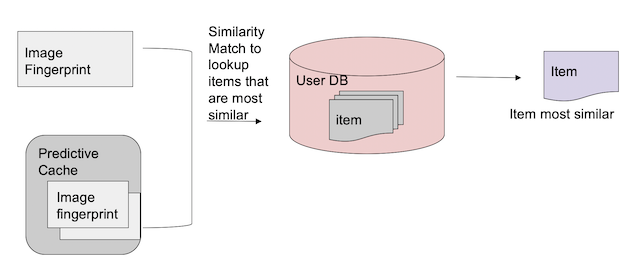

Behind the scenes, the kiosk is running an app that uses a facial recognition ML model such as OpenFace to generate a unique fingerprint of the captured face image. The app then performs a similarity match between the fingerprint of the captured face image and the fingerprints of images in the Couchbase Lite database to identify the closest match.
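To make the idea of a similarity match concrete, here is a minimal sketch in plain Swift of what comparing fingerprints by cosine distance amounts to. It is only an illustration and not how Couchbase Lite implements its distance functions. The helper names `cosineDistance` and `closestMatch`, the document IDs and the tiny three-element fingerprints are all assumptions made up for this example; real face embeddings are much longer vectors.

```swift
import Foundation

/// Cosine distance between two equal-length vectors: 1 - cos(angle between them).
/// Returns nil when the lengths differ, the vectors are empty, or either has zero magnitude.
func cosineDistance(_ a: [Double], _ b: [Double]) -> Double? {
    guard a.count == b.count, !a.isEmpty else { return nil }
    let dot = zip(a, b).map { $0 * $1 }.reduce(0, +)
    let normA = sqrt(a.map { $0 * $0 }.reduce(0, +))
    let normB = sqrt(b.map { $0 * $0 }.reduce(0, +))
    guard normA > 0, normB > 0 else { return nil }
    return 1.0 - dot / (normA * normB)
}

/// Returns the ID of the stored fingerprint with the smallest distance to `probe`,
/// or nil when no stored fingerprint can be compared with it.
func closestMatch(to probe: [Double], in stored: [String: [Double]]) -> String? {
    var best: (id: String, distance: Double)? = nil
    for (id, fingerprint) in stored {
        guard let distance = cosineDistance(probe, fingerprint) else { continue }
        if let current = best, current.distance <= distance { continue }
        best = (id, distance)
    }
    return best?.id
}

// Hypothetical fingerprints of registered users (illustrative values only).
let registered: [String: [Double]] = [
    "user::alice": [1.0, 0.0, 0.0],
    "user::bob": [0.0, 1.0, 0.0],
]

// Hypothetical fingerprint of the image captured at the kiosk.
let captured = [0.9, 0.1, 0.0]
let match = closestMatch(to: captured, in: registered)
```

A smaller distance means the two fingerprints point in a more similar direction, so the closest match is the stored fingerprint with the lowest distance. In a real app you would typically also apply a threshold so that an unregistered face does not get matched to the nearest registered user.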

Feature Walkthrough

The best way to understand how the API works is through an example. In this post, I am going to describe how you can implement the facial recognition application discussed above using the Predictive Query API. This is probably the more complicated of the two workflows. The image-based search app using a classifier model follows a similar pattern, except that it is a lot simpler.

- Prerequisites

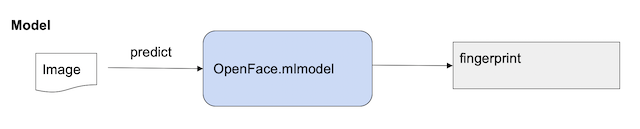

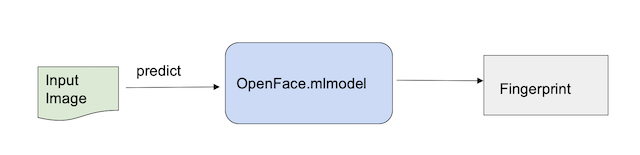

- A face recognition ML model is available in the app. The ML model could have been bundled with the app or pulled down from an external repository, with techniques similar to this. The model takes in an image and outputs a “fingerprint” or “face embedding”, which is essentially a vector representation of the image features.

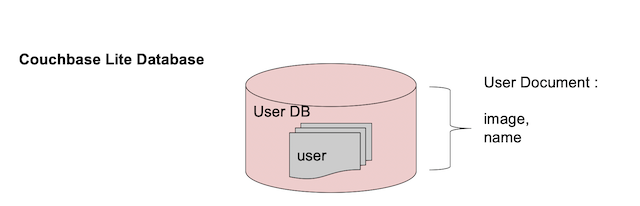

- The Couchbase Lite database is populated with relevant data. In our use case, it would correspond to a registered user database with “user” type documents. Each user document includes a blob corresponding to a photo of the registered user.

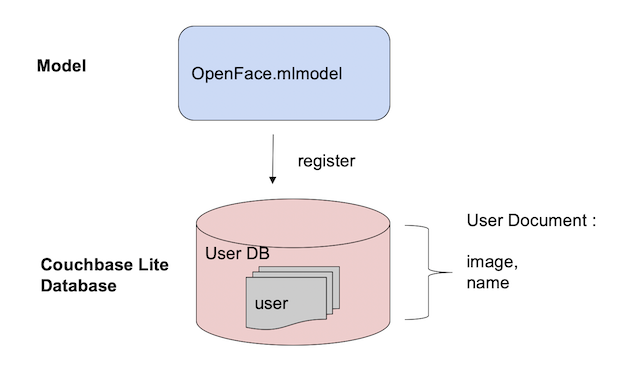

- Step 1 : Register the ML model with Couchbase Lite

You can run predictions using any ML model. As an app developer, you implement the PredictiveModel interface and register it with Couchbase Lite. This interface is very straightforward and defines a single predict() method that the app developer implements. Any time the prediction function is invoked on Couchbase Lite, the underlying predict() method of the PredictiveModel is invoked.

```swift
// 1: Register the model
Database.prediction.registerModel(mlModel, withName: modelName)
```

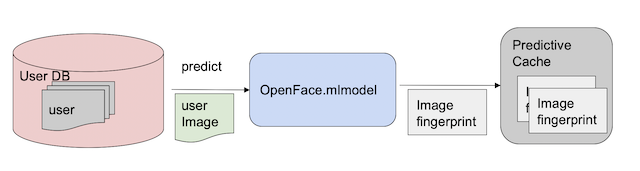
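As a rough sketch of what a PredictiveModel implementation could look like in Swift, the adapter below reads an image blob from the input dictionary and returns a dictionary holding a fingerprint. Several things here are assumptions made for illustration: the `"photo"` and `"fingerprint"` keys, the `FaceModel` and `registerFaceModel` names, and above all `placeholderFingerprint(for:)`, which is a stand-in histogram and not a face recognition model. In a real app that function would call into your ML framework of choice. The Couchbase Lite portion is wrapped in `canImport` so the sketch only compiles it where the Enterprise Edition framework is present.

```swift
import Foundation
#if canImport(CouchbaseLiteSwift)
import CouchbaseLiteSwift
#endif

// Placeholder for a real face recognition model (hypothetical, illustration only).
// It summarizes the image bytes as a normalized 4-bin histogram so that the sketch
// stays self-contained; it does NOT produce a meaningful face embedding.
func placeholderFingerprint(for imageData: Data) -> [Double] {
    guard !imageData.isEmpty else { return [] }
    var bins = [Double](repeating: 0, count: 4)
    for byte in imageData {
        bins[Int(byte) / 64] += 1   // byte values 0...255 map to bins 0...3
    }
    return bins.map { $0 / Double(imageData.count) }
}

#if canImport(CouchbaseLiteSwift)
// Adapter that exposes the model to Couchbase Lite through the PredictiveModel protocol.
final class FaceModel: PredictiveModel {
    func predict(input: DictionaryObject) -> DictionaryObject? {
        // "photo" is an assumed input key; returning nil signals that no prediction was made.
        guard let imageData = input.blob(forKey: "photo")?.content else { return nil }
        // "fingerprint" is an assumed output key.
        return MutableDictionaryObject(data: ["fingerprint": placeholderFingerprint(for: imageData)])
    }
}

// Registers the adapter under an assumed model name.
func registerFaceModel() {
    Database.prediction.registerModel(FaceModel(), withName: "FaceModel")
}
#endif
```

Keeping the model call in a plain function, separate from the Couchbase Lite adapter, is one way to make the prediction logic easy to test on its own.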

- Step 2 : Create Predictive Index

Create a predictive index on the images in the Couchbase Lite database by running a prediction on all the images using the registered ML model. While this step is optional, creating the index is highly recommended because it has a significant impact on query-time performance.

```swift
// 2: Build an index of predictions on the images in the database
let index = IndexBuilder.predictiveIndex(model: modelName, input: imagePropertyInDB)
try db.createIndex(index, withName: "faceIndex")

// Generate fingerprints by running predictions on the images in the database
let fingerPrintOfImagesInDB = Function.prediction(model: modelName, input: imagePropertyInDB)
```

- Step 3 : Prediction on captured image

Run a prediction on the image input into the app using the prediction function.

```swift
// 3: Generate a fingerprint by running a prediction on the input image
let fingerPrintOfInputImage = Function.prediction(model: modelName, input: inputPhoto)
```

- Step 4 : Similarity Match & User document query

Run a similarity match between the captured image and the images in the database using one of the vector distance functions. Then query the Couchbase Lite database for the documents with the closest match.

```swift
// 4: Find the distance between fingerprints (similarity match of prediction results)
let distanceBetweenImages = Function.cosineDistance(between: fingerPrintOfInputImage, and: fingerPrintOfImagesInDB)

// Query for the document in the database with the closest match (smallest distance)
let query = QueryBuilder.select(SelectResult.all())
    .from(DataSource.database(db))
    .orderBy(Ordering.expression(distanceBetweenImages).ascending())
    .limit(Expression.int(1))
```

That’s it! In just 4 simple steps, you can use the Predictive Query API to implement a facial recognition application based on data in Couchbase Lite.

What Next

With the new Predictive Query API, Couchbase continues to showcase thought leadership in the area of mobile and embedded data storage. In this post, we discussed a few applications and walked through an example of how you would leverage the Predictive Query API. Hopefully that has inspired you to create your own, and we can’t wait to see the new features that you will enable in your apps with this capability.

Here are direct links to some helpful resources –

– Predictive Query Documentation.

Includes a step-by-step guide to use the API

– Couchbase Lite Downloads

The Predictive Query API is available under the Enterprise License. Our Enterprise Edition is also free to download and use for development purposes.

– Sample App

Sample app demonstrating use of Predictive API with a Classifier ML model

– Couchbase Mobile blogs

– Couchbase Forums

If you have questions or feedback, please leave a comment below or feel free to reach out to me via Twitter or email.
