The traffic is coming from the Couchbase cluster to the consumer on port 11210.

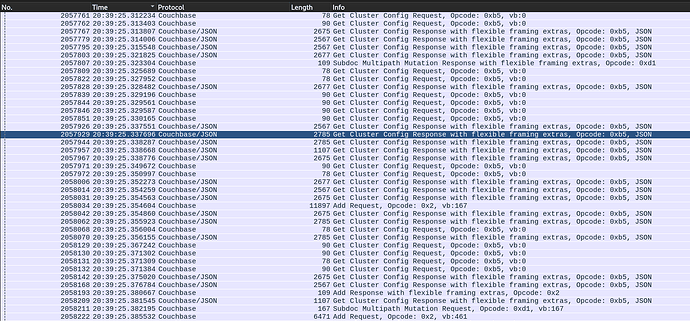

The traffic behaves as a spike, flooding the network port of the consumer. Here is one example from one of the servers.

This is happening on multiple consumers, and the traffic comes from all the Couchbase servers in the cluster.

Also, something strange is that it happens on all the consumers at the same time, at the same 30-minute interval. There is no cron job/scheduler involved, because the time at which it happens drifts: one week ago it was happening at minutes 05 and 35 of every hour, and now it is happening at minutes 09 and 39 of every hour.
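To put a number on that drift, here is a rough back-of-the-envelope sketch. It assumes that "one week ago" means exactly 7 days, that the schedule shifted by exactly 4 minutes in that time (05 to 09), and that no cycles were skipped; `drift_per_cycle` is just a helper name made up for this calculation.

```python
from datetime import timedelta


def drift_per_cycle(shift: timedelta, elapsed: timedelta, nominal: timedelta) -> timedelta:
    """Average extra delay added to each cycle, given the total observed shift."""
    cycles = elapsed / nominal  # timedelta / timedelta gives a float number of cycles
    return shift / cycles


# Assumed inputs: 4 minutes of shift over 7 days of nominal 30-minute cycles.
per_cycle = drift_per_cycle(
    shift=timedelta(minutes=4),
    elapsed=timedelta(days=7),
    nominal=timedelta(minutes=30),
)

if __name__ == "__main__":
    print(f"average drift per cycle: {per_cycle.total_seconds():.3f} s")
    print(f"effective period: {(timedelta(minutes=30) + per_cycle).total_seconds():.3f} s")
```

Under those assumptions each cycle runs a bit under one second longer than 30 minutes, which is what I would expect from a timer that is re-armed after each run rather than from a wall-clock schedule. That is only a guess on my side.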

(A consumer is a client using the PHP SDK.)

Something I see is that I get a lot of `opcode=0x1` messages at the moment of the spike, and before the spike there are almost 10 minutes with no `opcode=0x1` messages at all. Then, suddenly, there is a huge spike.

[2025-04-08 09:58:13.232] 0ms [trac] [107,151] [0a7767-084c-674e-dcce-5857a83a666e78/64701a-19ab-004c-5ee1-768ad9e9d6592b/plain/bucket-redacted] <07.cb.redacted/ip-redacted:11210> MCBP recv {magic=0x82, opcode=0x1, fextlen=0, keylen=0, extlen=16, datatype=1, vbucket=0, bodylen=4897, opaque=0, cas=0}

Above is the last log entry containing `opcode=0x1` before the gap.

......

About 10 minutes with no `opcode=0x1` messages

......

Below is the first log entry containing `opcode=0x1` after the gap.

[2025-04-08 10:09:17.204] 23ms [trac] [102,2228] [df6f79-3660-b14f-0488-6ef49e75045998/7f38bd-97da-db45-fa99-caa308280ab0a2/plain/bucket-redacted] <02.cb.redacted/ip-redacted:11210> MCBP recv {magic=0x82, opcode=0x1, fextlen=0, keylen=0, extlen=16, datatype=1, vbucket=0, bodylen=4897, opaque=0, cas=0}
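For anyone who wants to reproduce the observation on their own trace logs, this is a minimal sketch of how the `opcode=0x1` messages can be counted per minute and how the quiet periods can be found. It assumes the log lines have the same shape as the two entries above (timestamp in the first square brackets, followed by `MCBP recv {... opcode=0x1 ...}`); the function names and the 5-minute gap threshold are my own choices, not anything from the SDK.

```python
import re
from collections import Counter
from datetime import datetime, timedelta

# Matches trace lines shaped like the two entries quoted above.
# \b after 0x1 keeps opcodes such as 0x1f or 0x10 from matching.
LINE_RE = re.compile(
    r"^\[(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}\.\d{3})\].*MCBP recv \{.*\bopcode=0x1\b"
)

SAMPLE_LINES = [
    "[2025-04-08 09:58:13.232] 0ms [trac] [107,151] [0a7767-084c-674e-dcce-5857a83a666e78/64701a-19ab-004c-5ee1-768ad9e9d6592b/plain/bucket-redacted] <07.cb.redacted/ip-redacted:11210> MCBP recv {magic=0x82, opcode=0x1, fextlen=0, keylen=0, extlen=16, datatype=1, vbucket=0, bodylen=4897, opaque=0, cas=0}",
    "[2025-04-08 10:09:17.204] 23ms [trac] [102,2228] [df6f79-3660-b14f-0488-6ef49e75045998/7f38bd-97da-db45-fa99-caa308280ab0a2/plain/bucket-redacted] <02.cb.redacted/ip-redacted:11210> MCBP recv {magic=0x82, opcode=0x1, fextlen=0, keylen=0, extlen=16, datatype=1, vbucket=0, bodylen=4897, opaque=0, cas=0}",
]


def opcode1_timestamps(lines):
    """Return the timestamps of all received opcode=0x1 messages."""
    stamps = []
    for line in lines:
        match = LINE_RE.search(line)
        if match:
            stamps.append(datetime.strptime(match.group(1), "%Y-%m-%d %H:%M:%S.%f"))
    return stamps


def per_minute_counts(stamps):
    """Count messages per wall-clock minute, to make the spike visible."""
    return Counter(ts.replace(second=0, microsecond=0) for ts in stamps)


def quiet_gaps(stamps, threshold=timedelta(minutes=5)):
    """Return (last_before, first_after) pairs separated by at least `threshold`."""
    ordered = sorted(stamps)
    return [(a, b) for a, b in zip(ordered, ordered[1:]) if b - a >= threshold]


if __name__ == "__main__":
    stamps = opcode1_timestamps(SAMPLE_LINES)
    for minute, count in sorted(per_minute_counts(stamps).items()):
        print(minute.strftime("%H:%M"), count)
    for before, after in quiet_gaps(stamps):
        print("gap:", before, "->", after, "=", after - before)
```

To run it on a real log, replace `SAMPLE_LINES` with the lines of the file, for example `open("trace.log")`, where `trace.log` is a placeholder name.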