Thank you @ingenthr for your reply.

I will review the documentation for ping(), try using it, and determine whether it remedies the issue.

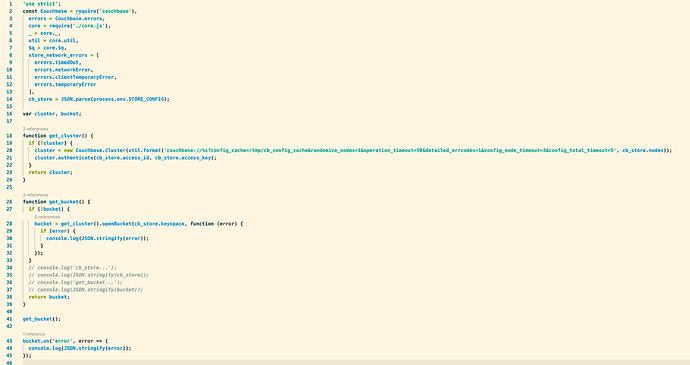

Before I answer your questions or provide additional context, I want to be clear about what I believe is needed at this point. The code snippet I posted to this topic accurately illustrates our connection configuration strategy. Although checking for an active connection is necessary, correctly reconnecting after a dropped connection is equally important. If the configuration I posted does not do both, the issue I reported may be resolved by correcting or improving our current connection implementation.
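For discussion, here is a minimal sketch of the "check, then reconnect" behaviour described above, assuming a Node.js Lambda that caches one connection per warm container. `createConnectionManager`, `connectFn`, and `pingFn` are hypothetical names, not part of our store.js or of the Couchbase SDK. The two functions are injected so the pattern can be exercised without a cluster, and the sketch assumes `pingFn` throws when the connection is unusable.

```javascript
// Hypothetical helper: keeps one connection per warm Lambda container,
// health-checks it before each use and rebuilds it when the check fails.
// `connectFn` and `pingFn` are placeholders for real SDK calls.
function createConnectionManager(connectFn, pingFn) {
  let connPromise = null; // module-level cache, reused while the container stays warm

  async function open() {
    connPromise = connectFn();
    try {
      return await connPromise;
    } catch (err) {
      connPromise = null; // never cache a failed connection attempt
      throw err;
    }
  }

  return async function getConnection() {
    if (!connPromise) {
      return open(); // first use in this container
    }
    let conn;
    try {
      conn = await connPromise;
      await pingFn(conn); // assumed to throw if the connection went stale (e.g. after a freeze)
      return conn;
    } catch (err) {
      // Cached connection is unusable: close it (best effort) and reconnect once.
      if (conn && typeof conn.close === 'function') {
        try {
          await conn.close();
        } catch (_) {
          // ignore errors while closing a connection that is already broken
        }
      }
      connPromise = null;
      return open();
    }
  };
}
```

With the Couchbase Node.js SDK 3.x, `connectFn` could wrap `couchbase.connect(connStr, { username, password })` and `pingFn` could call `cluster.ping()` and throw when a reported endpoint is not healthy; the exact shape of the ping result should be checked in the SDK documentation for the version in use. Since a Lambda execution environment handles one invocation at a time, the sketch does not guard against concurrent reconnects, and the per-call ping trades one extra round trip for detecting a stale connection early.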

To your questions:

Does this freeze/thaw sound plausible?

Response:

It's hard to say with this configuration. I can say with confidence that we had solved all previous intermittent connectivity issues by following the Couchbase documentation, the assistance provided in this forum, and the advice and examples in numerous blogs and articles.

To be clear, we haven’t experienced “connection timeouts”, “…shutdown bucket”, or any other network or connectivity failures for some time. We have also successfully applied retry strategies where appropriate. The code above, together with these improvements, resolved request failures related to “cold starts” some time ago, and connectivity has been very stable since.
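As an illustration of what a retry strategy can look like (a generic sketch, not the exact logic in our code), the helper below retries an async operation with exponential backoff. `withRetry` and its `retries`, `baseDelayMs`, and `shouldRetry` options are hypothetical names; in practice `shouldRetry` should accept only errors known to be transient.

```javascript
// Hypothetical helper (not a Couchbase SDK API): retries an async
// operation with exponential backoff between attempts.
async function withRetry(
  operation,
  { retries = 3, baseDelayMs = 100, shouldRetry = () => true } = {}
) {
  let lastError;
  for (let attempt = 0; attempt <= retries; attempt += 1) {
    try {
      return await operation(attempt);
    } catch (err) {
      lastError = err;
      // Give up after the final attempt or on errors not considered transient.
      if (attempt === retries || !shouldRetry(err)) break;
      const delayMs = baseDelayMs * 2 ** attempt; // 100, 200, 400 ms with the defaults
      await new Promise((resolve) => setTimeout(resolve, delayMs));
    }
  }
  throw lastError;
}
```

Keeping the retry decision in `shouldRetry` avoids repeating requests that can never succeed, such as authentication failures.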

With connection stability achieved, we focused on improving the response time of requests made to idle serverless functions (“cold starts”). Before we adopted the recently released AWS Lambda Provisioned Concurrency feature, responses rarely failed, even when they were slow.

Lambda Provisioned Concurrency was the logical choice for reducing the latency caused by cold starts (“freeze/thaw”). That effort is what led to this topic.

Do you know of any way to detect it?

Yes, and we have. Unfortunately, it appears that the “cure” itself is responsible for the “symptom” I am reporting here.

Lambda Provisioned Concurrency promises to keep a configurable number of “warm” instances of the function available at all times, and it appears to do exactly that. Connectivity to our cluster, however, has been the challenge. We are now trying to figure out why we cannot maintain a stable connection to our CB cluster, reconnect, or otherwise reinitialize one.

I assumed that, since our connection configuration was to spec and appeared robust, we should have no issues connecting, reconnecting, or even reinitializing communication with CB from any active instance. Our connection logic has always lived in a separate “store.js” file that is “required” by each Lambda function that depends on the CB cluster.

We believe our connection logic follows the recommended practices. If it does not, we would like to learn what improvements or adjustments are needed so that a “warm” instance of a serverless function works reliably.